Only a small percentage of people think of Charles Babbage and Ada Lovelace when they think of computers. Babbage conceived of a mechanical computer and Lovelace became the first programmer. Both were extraordinarily gifted mathematicians and their work underlies the modern world of computing. (In their time, a “computer” was actually a person who carried out calculations.)

Of course, the first difference engine was composed of around 25,000 parts, weighed fifteen tons (13,600 kg), and stood 8 ft (2.4 m) high. (Reference: Wikipedia). The march of progress would quickly change computers from being massive mechanical machines into massive electronic machines; they’d still fill rooms and no-one would really want one for the home.

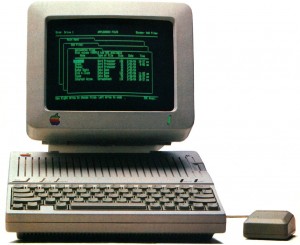

A few decades later, computers were still heavy, complex, static machines and no-one would really want one in their home. It took a serendipitous meeting in an equally serendipitous place to create the first personal computers. This generation had screens and keyboards, and it became possible (and even desirable) to have one at home.

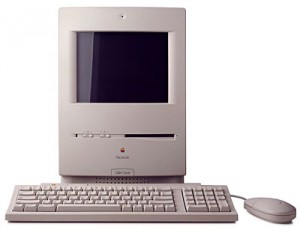

But computers were still complex, still businessy and still a little stuffy. There were limits to what could be achieved with that generation and no-one seemed to be up to the challenge of making computers even better. We were stuck in the Bronze Age of computing. It took another set of serendipitous circumstances: a decade later there was another breakthrough, and another generation was born.

Now computers were ‘friendlier’: a new paradigm had been invented and everyone copied it. The only problem was that as everyone copied, they neglected to innovate, and computers didn’t change. We were stuck again, as the variations seemed to be mostly about adding different varieties of eye candy. One thing became certain – the newer graphical user interfaces made computers easier to understand and made it easier for non-technical individuals to grasp computing concepts. However, we were stuck in this Silver Age for twenty-five years. Whether you used a Mac, the derivative Windows or Linux (which modelled almost all of its user interface elements on Windows or the Mac), you were using an interface which was first released to the public in 1984.

So, I’m obviously arguing that the iPad is the third generation of Personal Computer, that it ushers in a new Golden Age of computing. And I really believe this. Apple tried it back in the 90s with the Newton – and if you don’t think the Newton was insanely great then you obviously never used one.

It’s true the iPad removes most of the OS from the end user. But is this a bad thing?

If you’re like me you spend a lot of time with the operating system of a computer. I can always find something to fiddle with, something to pay attention to with just the basic OS. With the iPhone (and by extension, the iPad), I can’t do too much other than flick between screens. This is not a bad thing. It’s going to be all about the software.

While there’s a lot of attention on the iPhone towards apps like WeightBot – apps which do one simple thing really well – we’re going to see a whole plethora of new apps which do one complex thing really well on the iPad. We have seen Pages, Numbers and Keynote on iPad and it’s only a matter of time before we see apps like Soulver, Coda, OmniGraffle and even iMovie.

We’ll only see one thing at a time on the screen and again, that’s no bad thing. We can concentrate on the task at hand. Yes, I believe Apple is going to give us the ability to run certain AppStore-authorised third-party background processes soon, so we can run location apps, Spotify and other ‘essentials’, but it will still be a task-oriented computer. And if Apple released a version of Xcode for iPad, would there be the same debate?

I can’t wait.

(Inspired by Mike Cane’s post regarding Jef Raskin being the father of the iPad)

And even back then in 1979, Raskin saw very far ahead:

“The third generation personal computers will be self-contained, complete, and essentially un-expandable.”

I agree to a large extent with you. I do think that the iPad is more a representative of things to come.

It’s the future, present in a particular form: primarily casual, a companion computer to a main computer. It’ll fill in while you are away from your desk and it’ll be your on-the-sofa computer.

For me, things will have to evolve a lot for devices like this to emulate the robustness of certain applications and workflows, such as editing feature films or major database management. But I have no doubt that day will come.

Hi Tommy,

I think that with the ‘cloud’, we’ll see more robust video and DBMS-style apps. The iPad is a capable computing device, but it doesn’t have to be more than a fabulous UI for very complex hybrid local/cloud software.